Susie Hargreaves OBE to leave IWF after 13 years’ 'distinguished service'

After 13 successful years at the helm of the Internet Watch Foundation (IWF), Susie Hargreaves OBE is leaving to take up a new opportunity.

In her final report for the Independent Inquiry into Child Sexual Abuse (IICSA), Professor Alexis Jay OBE, the inquiry’s chair, calls for plain and clear language to be used when talking about child sexual abuse. She said in her opening statement to the final report:

We also need to use the correct words to describe the actions of abusers – masturbation, anal and oral rape, penetration by objects – these words are still not considered acceptable terms by many in public and private discourse. Every incident of abuse is a crime and should not be minimised or dismissed as anything less, or downplayed because descriptions of the abuse might cause offence.

She is right. And it is thanks to her remarks that IWF has decided to make this data publicly available, and to describe what we’re seeing as accurately as we can.

If we cannot be brave enough to use the right words to describe the true horror of what children are coerced to do, how can we expect children to be brave enough to use the right words and to talk to trusted adults about what is happening to them online?

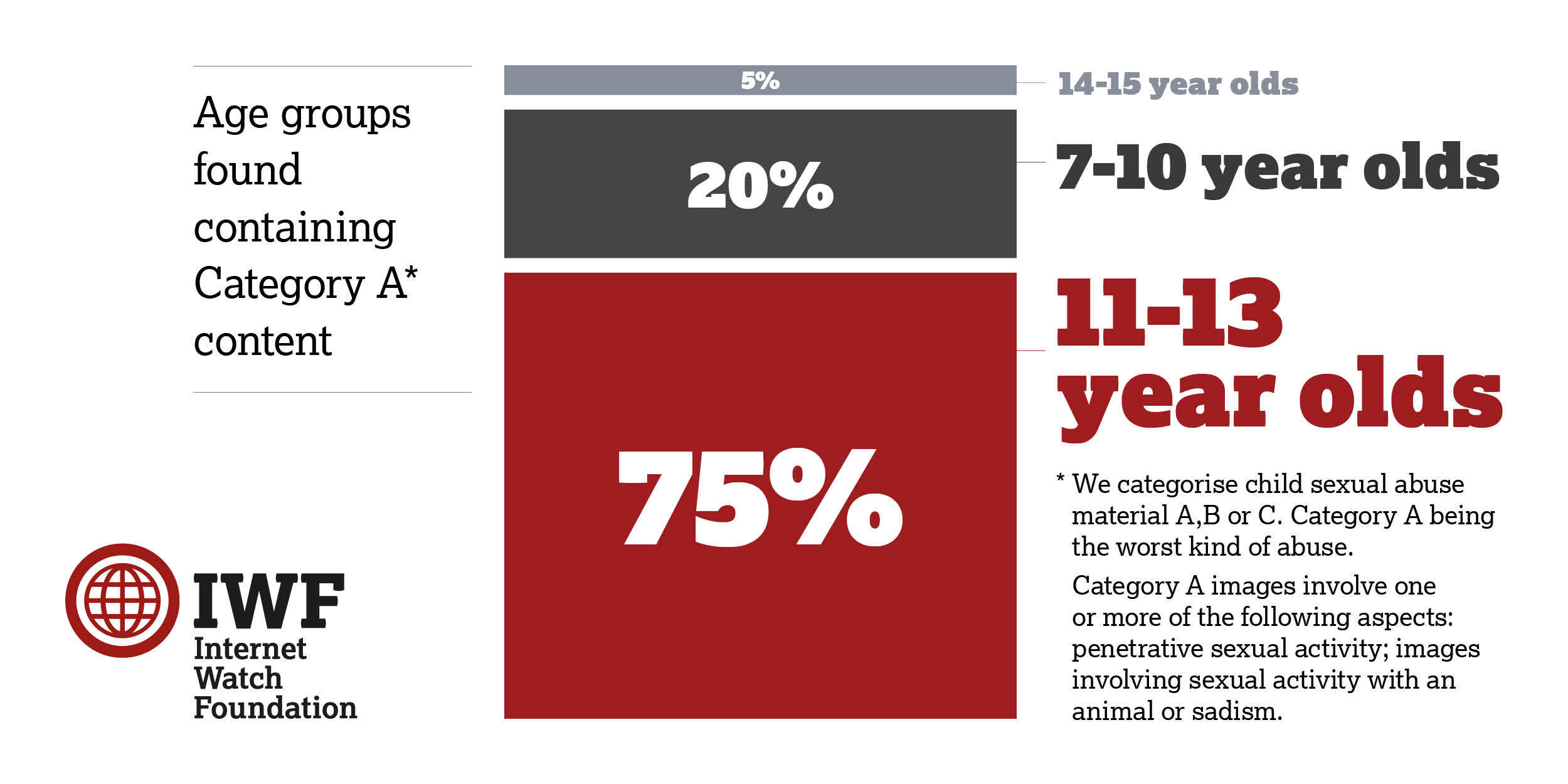

The IWF has been tracking an increase in ‘self-generated’ imagery of children aged 7 to 10. Over many years we have also published data, in studies and in the IWF annual report, showing an increase in the amount of ‘self-generated’ child sexual abuse material.

We assess child sexual abuse material according to the levels detailed in the Sentencing Council's Sexual Offences Definitive Guideline. The Indecent Photographs of Children section (Page 34) outlines the different categories of child sexual abuse material.

In the spring of 2022, an IWF analyst assessed a video of a young girl who was around seven or eight years old. She had been recorded while playing with her doll and lying on her bed. An online abuser appeared to instruct her to do a multitude of inappropriate acts, including penetrating herself and masturbating with the handle of a large sharp knife. Even to our analysts, whose resilience to this material is high, it was viewed as a truly horrific crime. This, coupled with questions to IWF about how a child, on their own (in most cases), could be seen in ‘penetrative sexual activity’, led to this study.

When a child is engaged in any type of sexual activity on webcam, either alone or with a perceived peer, it might be assumed that this is sexual exploration. However, this does not reflect what our analysts see in their work.

Data were collected between 16 and 22 June 2022 – five working days.

We collated all the Category A child sexual abuse images and videos which fitted the ‘self-generated’ definition. This is child sexual abuse content created using webcams or smartphones and then shared online via a growing number of platforms. In some cases, children are groomed, deceived or extorted into producing and sharing a sexual image or video of themselves by someone who is not physically present in the room with the child.

Category A images involve one or more of the following aspects:

- penetrative sexual activity
- sexual activity with an animal
- sadism

Anything which fitted this definition, within the timeframe allocated, was included in the study.

Whilst the term ‘self-generated’ indicates that the child is creating the content themselves, it is vital to remember that children are being groomed online and instructed to engage in this behaviour. No blame should be placed on the child.

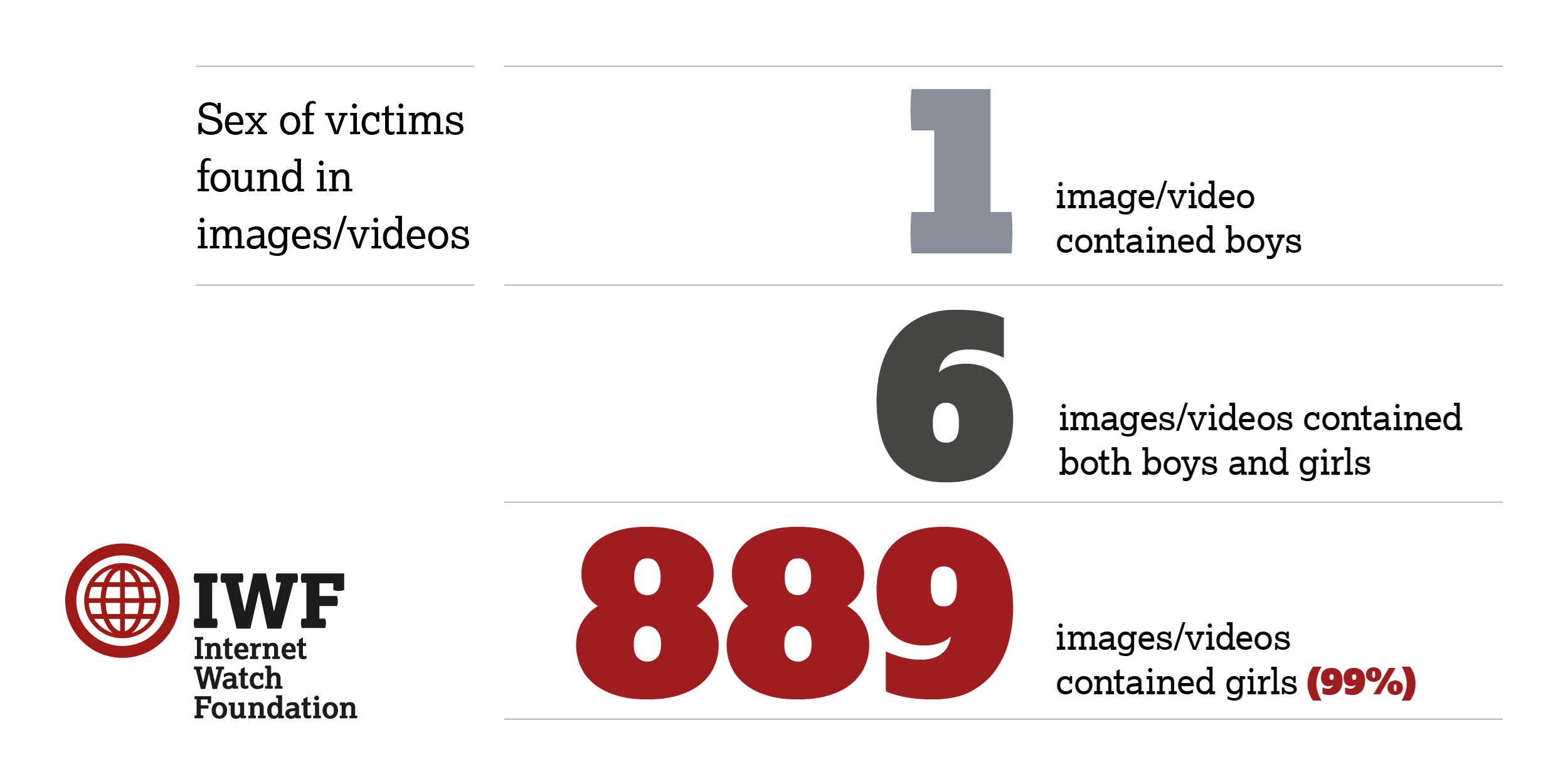

We found 896 instances of online child sexual abuse images or videos containing Category A content of a ‘self-generated’ nature.

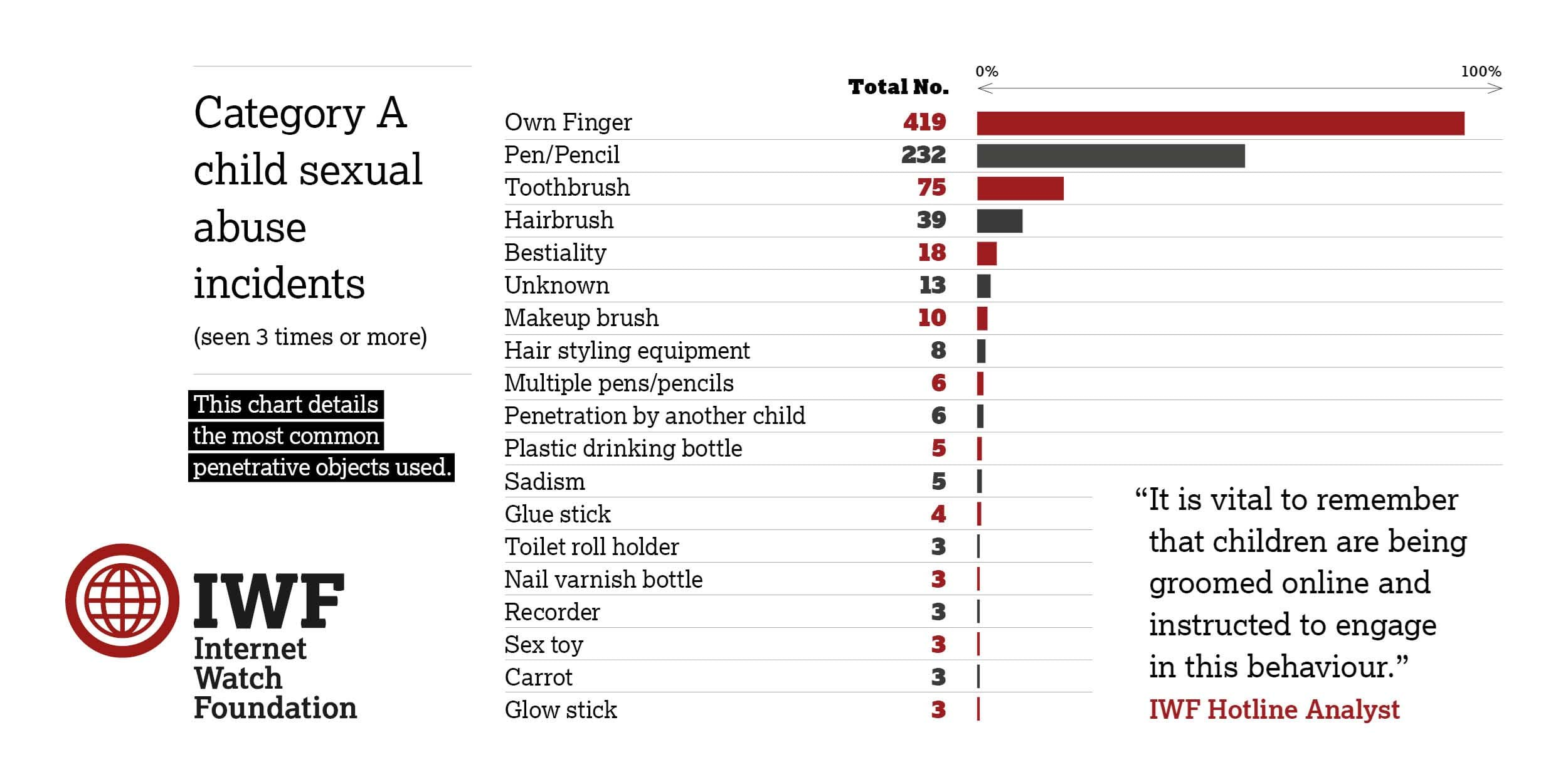

Below is a breakdown of all the objects used for the act of penetration that were seen in the images and videos. The most common object was the child’s own finger (419), followed by a pen or pencil (232), a toothbrush (75), and a hairbrush (39).

Below is a graph showing the most frequent objects/types of abuse that were seen three times or more.

The aim of gathering the data was to record the objects being used for penetration by searching for Category A content. However, Category A covers more than penetration, so images involving bestiality and sadism were also captured.

Eighteen images involved bestiality (the child was involved in sexual activity with what appears to be the family pet). To be clear, no penetration was seen in the images showing bestiality. Animals were most often seen licking a child’s genitals.

Five images contained sadistic content.

Other unusual objects found in the images included an egg whisk, a lint roller and a USB cable. Penetration by another child was also seen on six different occasions. In some images penetration could be seen taking place, but the object used was not clear enough to identify.

In total, 69 different variables were recorded. This does not mean there were 69 different objects; in some cases the child used multiple objects within the same image set or video. Because child sexual abuse images are frequently uploaded and distributed to many locations online, some of the images in this data set will have been repeated. Images are frequently copied and re-posted under a different URL.

When we’ve assessed that an image or video fails UK law, our aim is to get it removed from the internet as fast as possible.

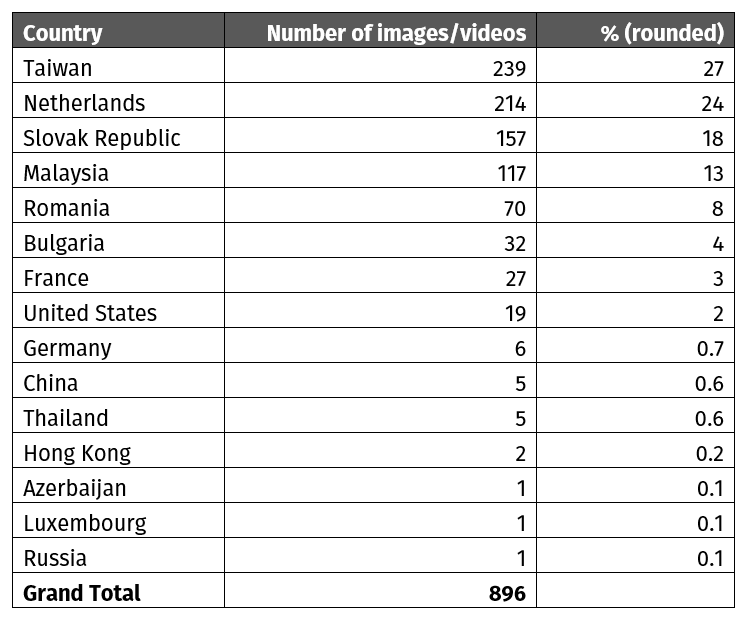

To do this, we trace the content to identify the physical server it is hosted on. This tells us which partners, in which country, we need to work with. When the content is removed from the physical server (its source), we can be sure that it has also been removed from any websites, forums or image boards that link to it.

The table below shows the countries in which the imagery was hosted. To be clear, this does not tell us which country the victim(s) or offender(s) were in, nor from which country the content was uploaded online.

This is a typed extract of a video found in the data. It involves a young girl being directed on webcam.

From her bedroom, a young British girl addresses her watchers and asks them to put any questions they might have in the chat below. She apologises for having to move to a different platform, as she was banned before. She tells the viewers she does not like to use Snapchat because her friends are on there. She is asked her age several times throughout the video and says she is 14, but she looks around 12 or 13 years old.

Somebody asks her to show her feet and asks her if she can wear white socks, she says, “You have a foot fetish?” and giggles. Somebody tells her she is “Really pretty and hot” and she responds with “Thank you”.

She is asked to show her genitals on camera: “Release your p***y”.

They ask her to follow them back and she replies, “I’ll follow you all back after the live stream. What do you want me to do?” she asks then pauses to read their responses and tells them she needs to close the curtains. She is asked to show her naked body and she takes her shorts and underwear off.

“What else?” she asks, and then bends over and shows her genitals. She says, “Is that better?” and pulls the camera close to her genitals and masturbates and penetrates herself with her finger as instructed. She dances, exposes herself, masturbates and penetrates herself for several more minutes and then asks, “What would you like me to do?” and she penetrates her anus with her finger.

“Are you ready for the final piece?” she asks and shows her breasts whilst dancing and touching herself. After a while she says she must get changed but has a pair of dirty knickers and shows them to the camera. She says, “Try and get this up to 5k” and points to the bottom of the screen.

“I’m 14” she says again, “Sorry I’ve got to go” but says she would stay if she can get 5,000 viewers. She draws the livestream to a close by saying “Don’t forget to send, but I won’t be sending back as I do it on livestream.” She ends the video by thanking her viewers for joining.

The above video is a common example of what the analysts regularly find. The girl’s primary concern is the number of viewers she has and wants to have. We can only assume that the child has been groomed into behaving this way. Indeed, the transcript suggests this is not her first time but a common occurrence. The video did not reveal how many viewers she had, but her target was 5,000: a very large number of people to be viewing child sexual abuse material, let alone actively asking the child to carry out these acts on camera. Ultimately, this girl is being instructed to behave this way for one purpose: the viewers’ sexual gratification.

This snapshot study was carried out to record the objects that we saw children using for penetration purposes when streaming online with an abuser. It is not uncommon for IWF analysts to encounter this type of child sexual abuse. It is the first time, however, that we have published this sort of detail about penetrative sexual activity. We hope that this helps to inform others’ work: that of other hotlines, policy makers, technology companies, and law enforcement and our partners in the third sector who work tirelessly to protect children online.

Numerous objects were recorded, the most common being the child’s own finger. It could be argued that this behaviour is part of a normal developmental process of exploring sexuality.

However, this is certainly not always the case, especially given that 184 images showed children aged 7 to 10. Furthermore, the video transcript shows that the child was doing this for followers, and perhaps for likes or other incentives. When only a child or children can be seen in the content, it can be easy to forget that there is always someone watching on the other end, and often instructing children to carry out these acts.
