An IWF & Omaze Collaboration

We exist to stop child sexual abuse online

Launched in 1996, the UK’s not-for-profit Internet Watch Foundation (IWF) is a global organisation providing high-quality data and technical services to prevent the upload, storage, distribution and trade of child sexual abuse imagery around the world. Headquartered in the UK and working internationally, we provide Europe’s largest hotline for combating online child sexual abuse images and videos.

In the past five years alone, our highly trained and carefully looked-after human analysts have investigated – with ‘eyes on’ – more than 1.6 million reports, both those received from the public, police and tech companies and those arising from their own proactive searching.

We provide the most accurate trends and data on this issue, nationally and globally, informing threat assessments produced by law enforcement agencies and other global bodies. We proactively seek solutions to stay one step ahead of the perpetrator. This is a local, national and global social and digital emergency. With support from Omaze, we can work together to stop online child sexual abuse.

Latest Updates

IWF Annual Report 2024 launch

Our Annual Report 2024 provides the latest trends and data on the distribution of child sexual abuse images and videos on the internet. It also showcases the amazing work of our Hotline and wider teams. For more information and to watch our launch video please visit here.

Think Before You Share campaign

IWF's Think Before You Share campaign aims to help young people understand the harm of sharing explicit images and videos of themselves, and others, and encourage parents and educators to start timely conversations with children and young people.

Report Remove

We run Report Remove with NSPCC’s Childline, a world-first tool that gives children the power to take back control of their images. This service ensures that children are safeguarded and supported while we assess and act on the content they report.

IWF in the news

IWF worked with ITV News on an exclusive to raise awareness of the tactics of offenders after our analysts discovered a "handbook" to guide abusers looking to exploit children online.

Working together to stop child sexual abuse online

We wish to collaborate with Omaze to see a world where no child is ever sexually abused and then forced to face the very real possibility that a record of their terrible abuse will be shared online.

Shocking Statistics

In 2024, we assessed a webpage every 74 seconds. Every 108 seconds, that webpage showed a child being sexually abused.

See the impact we can make together

£500,000

Could support mandatory yearly counselling and psychological assessments for our analysts, who do an extraordinary job. They’re highly trained to identify criminal imagery, but they’re also exposed to other content they don’t expect to see. This funding would support our gold-standard welfare system.

£1 million

Young people are increasingly being groomed by online predators to create sexually explicit images and videos. This money could fund a one-off, life-changing preventative communications campaign to keep children safe online by working with schools and parents/carers across the country.

£1.2 million

Could annually back our in-house software developers to create and develop cutting-edge tech-for-good tools to accurately grade and eliminate child sexual abuse images, giving our expert human analysts a technological advantage to help us stay ahead of the criminals who ruthlessly abuse children.

£1.5 million

Could annually sustain our ever-growing Hotline in its crucial role to remove harmful content online. Each report we process is manually assessed by our highly trained analysts to provide trusted, accurate datasets to a global network of partners and tackle the distribution of child sexual abuse material worldwide.

£2 million

Could establish a dedicated and transformational IWF training centre to share expertise with NGOs and law enforcement, fostering impactful collaborations in combating online child exploitation.

Impact

Every day young people are contacted online by adults who try to manipulate, groom and sexually exploit them.

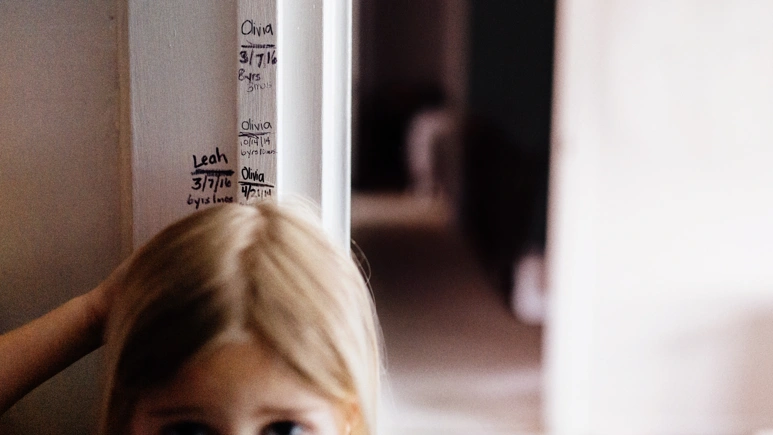

Let us talk you through Olivia's story…

In 2013 – over a decade ago – Olivia was rescued from her abuser. She was eight. Today she is 18, and an adult. But images and videos of her abuse are still shared and traded online.

Our analysts, who dedicate their time to finding and removing this imagery, have seen her grow up in pictures. They know her at three. They know her at four, five, six, seven and eight.

They know what she was made to do. In Olivia’s case, they also know that she was rescued. Not all abused children are that fortunate. They don’t know if Olivia is aware that her images are still being watched online. It’s our job to help her now.

We can’t reach into the screens and stop the abuse. But with a mission to eliminate all online child sexual abuse imagery, we aim to help all the Olivias out there into adulthood, regardless of whether they’re aware of us or not.

- In 3 out of 5 images, Olivia was raped or sexually tortured.
- Her imagery appeared 347 times in a 3-month period.
- This is 5 times a day on average.
- She was rescued by police aged 8.
- Olivia is one child; there are thousands like her.

In 2021, we launched our highly successful Home Truths campaign, which featured a scene that our analysts see day in, day out.

The rapid growth of 'self-generated' imagery is a social and digital emergency, which needs focused and sustained efforts from the Government, the tech industry, law enforcement, education and third sectors to combat it. Having the support of Omaze would significantly impact our work and help us on our mission to eliminate child sexual abuse online.